Let me start by qualifying the title with the obligatory phrase in some situations. TL;DR – it was probably too slow to start with.

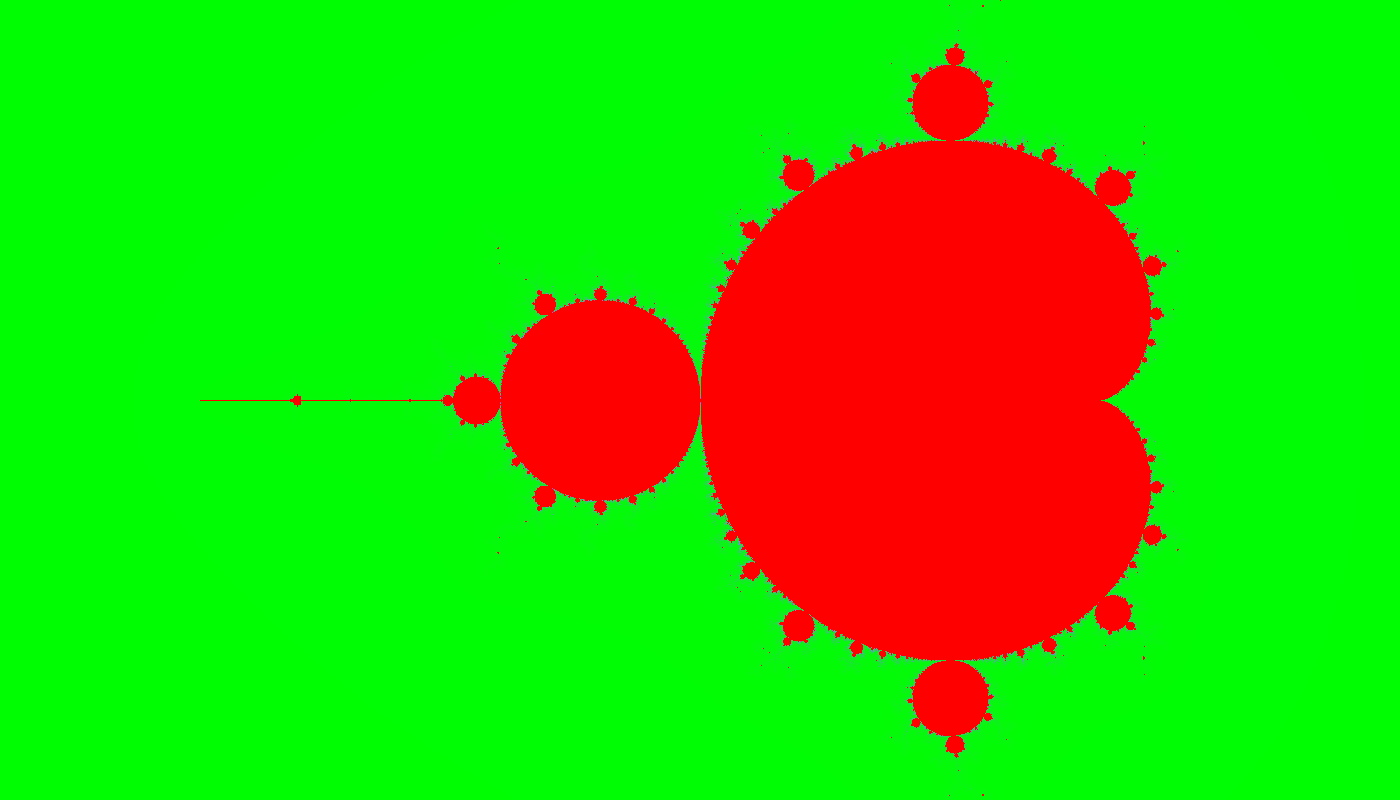

The performance story starts, like all good performance stories do, with a benchmark. I was looking for a benchmark to measure the allocation system, but also mix in a little calculation, in as few lines as possible – so I settled on the Mandelbrot program. But with a little “deoptimization” to use classes for the complex arithmetic rather than inlining the components. On a whim, I also decided to store the resulting image as an array of RGB classes, rather that an array of Int, which turned out to also have significant implications.

You can see the code in the haxe repo. You may note there are 2 versions controlled by a define, a class based one and an anonymous object (typedef) based one. The typedef option is for future optimization attempts. Also, the performance figures I will be talking about have the “SIZE” parameter increased to 40, to allow more accurate timing. The results are predictable, if not beautiful.

This benchmark is all about allocation and garbage collection (GC). The allocation part calls the object “new” routine to find some space on one of the memory blocks, and the collection part must scan the allocation space to reclaim object space that is no longer used. These phases have quite different optimization characteristics – the allocation part gets called literally millions of times, and therefore micro-optimizations at the code level may be appropriate, while the collection code get run less frequently, but must do quite a lot of work, so algorithmic optimizations might be more appropriate.

The initial results with hxcpp 3.2.102 were really quite bad. Hxcpp 3.2.102 takes approximately 35 seconds on a windows 32 build. Compare this to the node/js solution of 6.1 second. It should be noted that this test is measuring mostly allocations, and code base is small, so node can quite efficiently inline the code and use its highly-tuned single-thread allocation code. But still, it should be possible to improve things.

Before even looking at the low-level code, one thing that has a big effect on speed is the amount of free space available. The Gc allocates into its free memory, and when it’s full, it reclaims/collects the unused portions. The speed of the reclaiming depends mostly on the the amount of “live” memory after the collection. By increasing the amount of free memory, the time between collections increases, while keeping the collection time roughly the same, thereby improving the overall speed. Hxcpp uses a heuristic to determine the free space it should use depending on the “live” size at last collection. There was a fixed ratio in 3.2.103, but since then I have exposed the settings and increased the minimum working memory to 20M on desktop and 8M on mobile. This alone gives a nice improvement.

After creating a benchmark, the next step in optimization is to run a profiler. And the profiler did indeed show that most of the time was spent in the allocation code. It did not appear to be in one particular part of the code, but instead spread over the whole routine. This implies that I really need to get the line count down, and to that end I decided to simplify some the auxiliary data structures at the expense of memory. The hxcpp Gc uses conservative marking on the stack. That is, it scans the memory and looks for things that look like pointers to Gc objects. It then needs to know with 100% certainty that the thing pointed to is (or was recently) an actual object, otherwise the marking process could trash memory. The means that some extra information needs to be used to track this. Initially, I used what I thought was a clever trick to reuse the byte I was already using to indicate whether a line was marked as a head for a mini linked-list of live-objects. This worked and did not require extra memory, but the maintenance of the list complicated the allocation routine. I switched to using a extra bitmap, which uses 1 bit per 4 bytes of Gc memory to indicate whether an object starts at the particular location. This is 3% extra memory, but it greatly simplifies the allocation logic, allowing for a neater implementation.

The next point of simplification was pre-calculating the “gaps” in the Gc memory blocks. Initially, this was done in the allocation code, but by doing it in advance, it simplifies allocation code that is getting run millions of times. Theoretically, there are a similar number of operations going on here, but practically the separation makes the code much neater and therefore faster. Later, I moved this calculation to a separate thread so it can be done in parallel with the normal code.

I also rearranged the object header to allow the marking code to test whether an object needs marking with a single bit test, and therefore avoid an expensive marking function call in some circumstances. The header also includes a neater row count which makes the actual row marking faster.

These changes sped the allocations up considerably, but the profiler still showed most of the time running the allocation code. The main issue then became the function call depth. Modern c++ compilers can do a pretty good job at inlining code, but they are not magic, so some extra help is required. First a quick review of what happens when you call “new Complex” in haxe. Hxcpp generates a standard c++ “new” call, which calls into hx::Object “operator new” which is where the Gc memory allocation routine is called. C++ then initialises the object by inserting the vtable pointer and hxcpp then calls “__construct” on the object – which is the “new” function defined in haxe. It is split like this to allow the haxe code to call “super” in ways that c++ does not allow with its native constructors. The “operator new” code takes a parameter defining whether the resulting object contains pointers to other object – this allows the marking code to make some optimizations. In the original code, this then called an “InternalNew” function which checked the object size and delegated to either a thread-local allocator, or a large allocator. This allows independent threads to allocate at full speed without needing locks. The problem with this arrangement is that several function calls are required per allocation. The solution here was it inline quite a lot of code into the “operator new” function, and also to observe that haxe objects always allocate from the local allocator, so some some checks can be avoided. Inlining means that some of the guts of the allocator need to be exposed to the haxe code. However, this is kept under control by keeping the complex case (when there is not enough room) in the separate file, and only inlining the “easy” case, which is also helped by the simplifications made earlier. You can see the code here.

There are still a few things that could be done. There are some checks that could be avoided in single threaded app. Another idea I had was to move some of the housekeeping (setting of the header and start bits) to a separate thread to be done later. There are still 2 function calls going on here – the “operator new” (which gets inlined) and the “__construct” call (which does not). It should be possible to inline the construct call if it is simple enough.

These were the changes to the allocation code. As mentioned earlier, there were some improvements to the marking code. Additional things were also done in the collector. The reclaimed memory is cleared in a background thread. This is actually is quite a nice improvement because up to 7% of the time was spent doing the clear. One thing this particular benchmark highlighted was the marking of a single large array (the image array) actually got slower when using multi-threaded marking, due to a slight overhead. I’m not sure how applicable this is to general code, however there was a solution. The multi-threaded array marking code now splits large array marking up if there are idle marking threads.

So with all these improvements, did it beat the node/js speed? Just about – it was a close thing. One more change was really required – compile a 64 bit target instead of a 32 bit target. Then finally the time came in at 5.6 seconds, less than 1/6 the original time. Node is doing a pretty awesome job here and I’m sure it is only going to get better so it will take some work to keep up. If I change the benchmark to use member functions instead of static-inline functions, then hxcpp becomes significantly faster than node, which is nice to know. It should also be noted that I’m cheating a bit here by using multiple threads to get the job done. But I’m not above a bit of cheating.

This brings me to the Java target – 2 seconds. Wow. I’m not actually sure if it is doing any allocations here at all – maybe it has completely inlined the code. Or maybe it just really really good. That sets a seriously high benchmark

Is there still room for improvement? I think there is – particularly, a generational Gc would reduce the marking time to practically nothing in this case, but it requires a bit of effort on the haxe compiler side. Inlining the constructor for simple classes (like the “Complex” class in this code) should also be a nice win.

It has been an interesting experience getting the code faster. I think that it highlights one key thing – if you want the hxcpp code to run faster, then create a simple benchmark.